Overview

Goal: Automatically generate unit tests for your C# code using local LLMs (via Ollama), combining static analysis with AI-driven reasoning.

How-To:

- Analyzes source code and creates test stubs (using MSTest).
- Suggests meaningful test cases and expected outcomes using an LLM.

Roslyn for code parsing + Ollama for natural language processing and code creation.

Example Use Case

Let's say we have this method:

```csharp
public int AddNumbers(int a, int b)
{
    if (a < 0 || b < 0)
        throw new ArgumentException("Numbers must be positive.");

    return a + b;
}
```

Then we run our tool from the CLI:

```shell
$> dotnet aitestgen ./MyProject
```

And we get output like this:

```csharp
[TestClass]
public class CalculatorTests
{
    [TestMethod]
    public void AddNumbers_WithPositiveInputs_ReturnsSum()
    {
        var calc = new Calculator();
        Assert.AreEqual(5, calc.AddNumbers(2, 3));
    }

    [TestMethod]
    public void AddNumbers_WithNegativeInputs_ThrowsException()
    {
        var calc = new Calculator();
        Assert.Throws<ArgumentException>(() => calc.AddNumbers(-1, 3));
    }
}
```

The AI inferred the logic, conditions, and likely edge cases.

Remark:

Always review the code of the generated classes. A green light in the test runner is not enough. You still have some work to do; you just don't have to write the boilerplate by hand. The LLM may not have taken every eventuality into account, or one of the test methods may contain an error. After all, LLMs are not deterministic, so human supervision is essential. You can save yourself a lot of typing, but never give up independent thinking!

Implementation

Roslyn for Code Analysis

Use the Microsoft.CodeAnalysis.CSharp APIs to:

- Traverse .cs files.
- Extract class names, method names, parameter types, and docstrings.

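```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;

public static class RoslynExtractor
{
    // Parses the given source text and returns one entry per method,
    // together with the name of the class that declares it.
    public static List<(string ClassName, string MethodName, string Code)> ExtractMethods(string sourceCode)
    {
        var results = new List<(string, string, string)>();

        var tree = CSharpSyntaxTree.ParseText(sourceCode);
        var root = (CompilationUnitSyntax)tree.GetRoot();

        // Includes nested classes, because DescendantNodes walks the whole tree.
        var classes = root.DescendantNodes().OfType<ClassDeclarationSyntax>();

        foreach (var cls in classes)
        {
            var className = cls.Identifier.Text;
            var methods = cls.Members.OfType<MethodDeclarationSyntax>();

            foreach (var method in methods)
            {
                var methodName = method.Identifier.Text;
                // ToFullString keeps leading trivia such as XML doc comments.
                var methodCode = method.ToFullString();
                results.Add((className, methodName, methodCode));
            }
        }

        return results;
    }
}
```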
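The extractor works on source text, so something has to find the .cs files first. A minimal sketch of that traversal step, using only System.IO, could look like the following. The names SourceFileFinder and FindCSharpFiles are hypothetical and not taken from the sample project, and skipping the bin and obj folders is an assumption about what you usually want, not something the original tool is known to do.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical helper: not part of the original sample project.
public static class SourceFileFinder
{
    // Returns all .cs files below rootFolder, skipping build output folders.
    public static IEnumerable<string> FindCSharpFiles(string rootFolder)
    {
        return Directory
            .EnumerateFiles(rootFolder, "*.cs", SearchOption.AllDirectories)
            .Where(path => !IsInBuildFolder(rootFolder, path));
    }

    // True if any folder segment between rootFolder and the file is "bin" or "obj".
    private static bool IsInBuildFolder(string rootFolder, string path)
    {
        var relative = Path.GetRelativePath(rootFolder, path);
        var segments = relative.Split(Path.DirectorySeparatorChar, Path.AltDirectorySeparatorChar);

        return segments.Any(segment =>
            segment.Equals("bin", StringComparison.OrdinalIgnoreCase) ||
            segment.Equals("obj", StringComparison.OrdinalIgnoreCase));
    }
}
```

Each path returned can then be read with File.ReadAllText and passed to RoslynExtractor.ExtractMethods.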

Calling Ollama from C#

For this implementation we use the OllamaSharp NuGet package. We could just as well have used the OpenAI package or another client library.

```csharp
using System;
using System.Text;
using System.Threading.Tasks;
using OllamaSharp;

public class OllamaClient
{
    public async Task<string> GenerateTestsAsync(string nameSpaceOfTestClass,
        string nameOfTestClass, string codeOfExtractedMethod, string codeOfWholeClass)
    {
        try
        {
            // Set up the Ollama client via OllamaSharp.
            var uri = new Uri(Globals.OLLAMA_BASE_URL);
            var ollama = new OllamaApiClient(uri);

            // Select the local model to use for AI operations.
            ollama.SelectedModel = "gpt-oss:20b";

            // Create a chat session that carries the system prompt.
            var chat = new Chat(ollama, Globals.SYSTEM_PROMPT);

            // Fill the placeholders of the user prompt.
            // {$NAMESPACE1} ends up in the using directive of the generated test class.
            var prompt = Globals.USER_PROMPT
                .Replace("{$CODE1}", codeOfExtractedMethod)
                .Replace("{$CODE2}", codeOfWholeClass)
                .Replace("{$CLASSNAME}", nameOfTestClass)
                .Replace("{$NAMESPACE1}", nameSpaceOfTestClass)
                .Replace("{$NAMESPACE2}", Globals.NAMESPACE_FOR_TEST_PROJECT);

            // The answer is streamed token by token; collect it into one string.
            var sb = new StringBuilder();
            await foreach (var answerToken in chat.SendAsync(prompt))
                sb.Append(answerToken);

            return sb.ToString();
        }
        catch (Exception ex)
        {
            // Handle network / API errors here.
            Console.Error.WriteLine($"Error communicating with Ollama: {ex.Message}");
            return "";
        }
    }
}
```

As you can see, the user prompt needs both the method to be tested and the whole class that contains it. If you don't provide the whole class, the model tends to make wild guesses, for example about the constructor.

Both the user prompt and the system prompt reside in the Globals class. The system prompt looks like this:

```text
You are an expert C# developer that excels at creating unit tests.
When asked for test cases for .NET C# code, you will only create the C# code for MSTest for the test class,
do not decorate it with f.e. "```csharp".
Ensure that the tests are complete and sophisticated.
Include edge cases and exception tests when relevant.
Only generate the test methods, do NOT include the original method.
Return only valid C# code, with using statements if needed.
If there is the need for special notes include them as C# comments before the C# class definition itself.
Decorate the test class always under any circumstances with "[TestClass]".
Do NOT assume that the original class to be tested has a constructor that takes parameters that are not present
in the original class. ONLY work with constructor parameters that are present.
```
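Because LLM output is not deterministic, a model may occasionally wrap its answer in a Markdown code fence even though the system prompt forbids it. The following is a defensive sketch, not part of the original tool, that removes one leading and one trailing fence line before the result is saved. The names LlmOutputCleaner and StripMarkdownFences are hypothetical.

```csharp
using System;

// Hypothetical helper: not part of the original sample project.
public static class LlmOutputCleaner
{
    // Three backticks, built at runtime so this listing contains no literal fence.
    private static readonly string Fence = new string('`', 3);

    // Returns the answer without a surrounding Markdown fence, if there is one.
    public static string StripMarkdownFences(string llmOutput)
    {
        if (string.IsNullOrWhiteSpace(llmOutput))
            return string.Empty;

        var text = llmOutput.Trim();
        if (!text.StartsWith(Fence, StringComparison.Ordinal))
            return text; // nothing to strip

        // Drop the whole opening fence line, including a language tag such as "csharp".
        var firstLineEnd = text.IndexOf('\n');
        if (firstLineEnd < 0)
            return string.Empty; // the answer was only a fence line

        text = text.Substring(firstLineEnd + 1).TrimEnd();

        // Drop the closing fence if the model emitted one.
        if (text.EndsWith(Fence, StringComparison.Ordinal))
            text = text.Substring(0, text.Length - Fence.Length);

        return text.Trim();
    }
}
```

Answers that contain no fence pass through unchanged apart from trimmed whitespace, so the helper is safe to apply to every response.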

The user prompt contains placeholders that we replace at runtime with details from the C# code:

```text
Generate test cases in MSTest for the C# method that is noted within the <code_to_be_tested> block.
For reference (for example when dealing with constructor related stuff) the code of the whole original class where
the method to be tested originates is available in the <code_of_the_whole_original_class_for_reference> block.
Always include a USING statement for reference to the original class: "using {$NAMESPACE1};"
instead of doing something like "using MyNamespace; // replace with the actual namespace that contains your class".
For the namespace of the generated test class use the name "{$NAMESPACE2}".
For the name of the generated test class use the name "{$CLASSNAME}".

<code_to_be_tested>
{$CODE1}
</code_to_be_tested>

<code_of_the_whole_original_class_for_reference>
{$CODE2}
</code_of_the_whole_original_class_for_reference>
```
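One subtlety of filling these placeholders with chained string.Replace calls: text inserted by an earlier call is scanned again by the later ones, so source code that happens to contain a literal such as {$CLASSNAME} would be altered. A possible alternative, sketched here under the assumption that all placeholders follow the {$NAME} shape shown above, fills the template in a single pass. PromptTemplate and Fill are hypothetical names, not part of the sample project.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper: not part of the original sample project.
public static class PromptTemplate
{
    // Matches placeholders of the form {$NAME}, e.g. {$CODE1} or {$NAMESPACE2}.
    private static readonly Regex Placeholder = new Regex(@"\{\$(?<name>[A-Z0-9_]+)\}");

    // Replaces every known placeholder in one pass over the template.
    // Inserted values are not scanned again; unknown placeholders stay as they are.
    public static string Fill(string template, IReadOnlyDictionary<string, string> values)
    {
        return Placeholder.Replace(template, match =>
            values.TryGetValue(match.Groups["name"].Value, out var value)
                ? value
                : match.Value);
    }
}
```

With this helper, the replacement values would be passed as a dictionary keyed by CODE1, CODE2, CLASSNAME, NAMESPACE1 and NAMESPACE2.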

Remark:

We have not specified any naming conventions in our system prompt. For this reason, the LLM used here applies the UnitOfWork_StateUnderTest_ExpectedBehavior pattern developed by Roy Osherove. That is not the only valid naming pattern; these alternatives also have their merits:

- BDD-style naming, e.g. Given_ProductDoesNotExist_When_AddProduct_Then_ThrowsException
- Plain English with underscores, e.g. Adding_A_Product_That_Doesnt_Exist_Throws_An_Exception
- xUnit’s DisplayName, e.g. [Fact(DisplayName="Given the product does not exist, when AddProduct is called, throw an exception")]

If you want a different naming convention, be sure to state it in your system prompt.

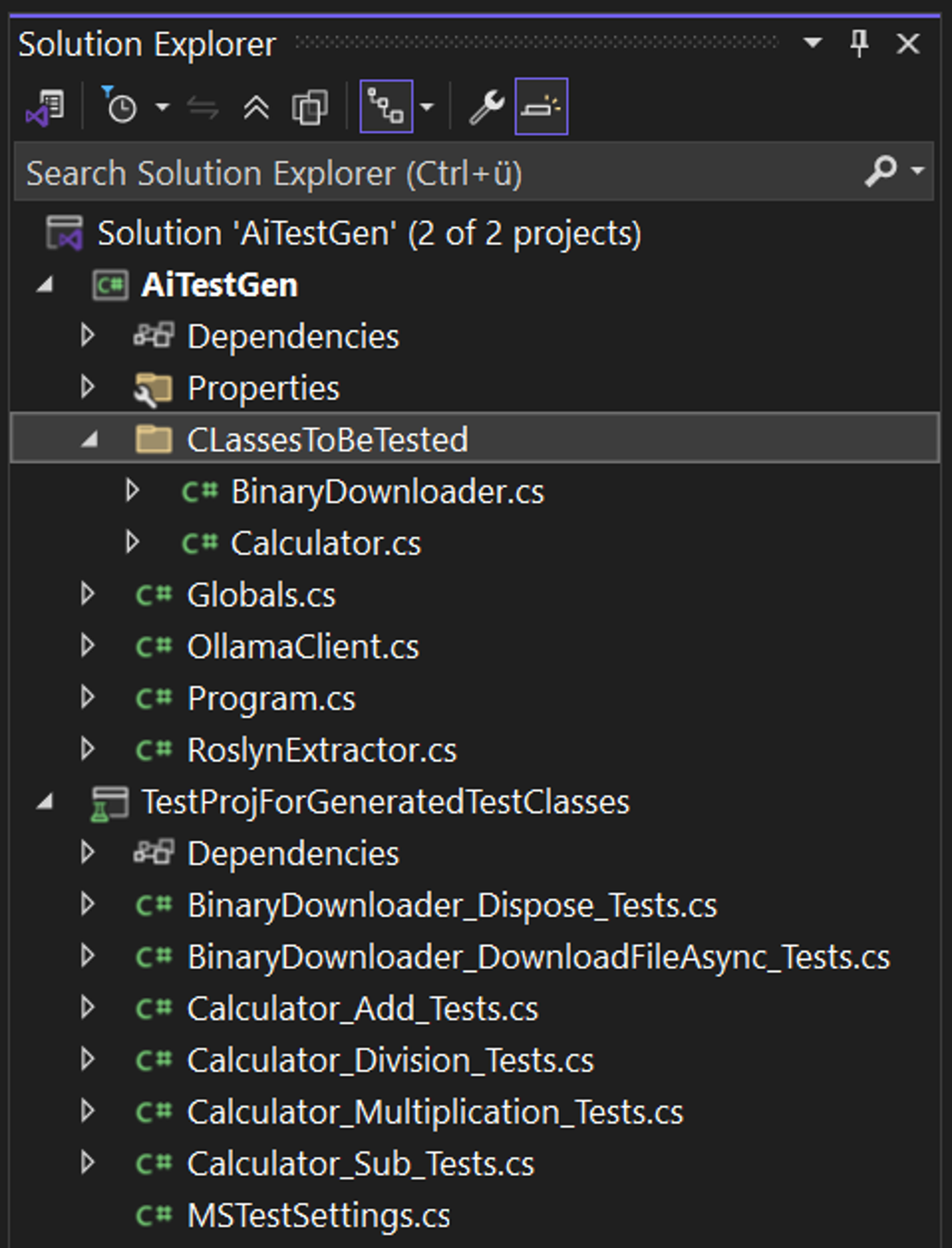

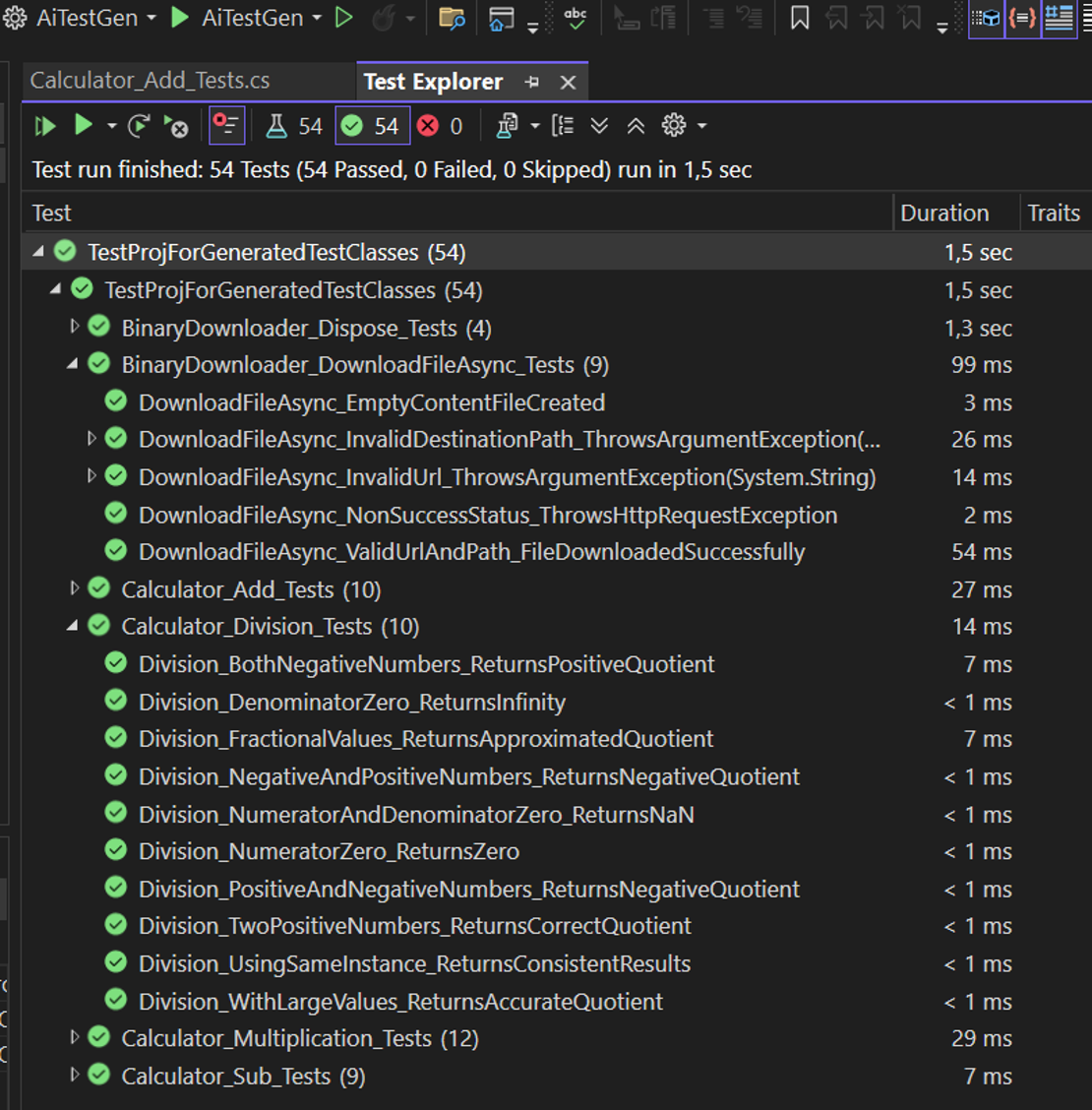

In the sample project, the project folder ClassesToBeTested contains two classes for which unit tests should be created.

As you may notice, all the test classes in the test project TestProjForGeneratedTestClasses were created by the AI. When the console app runs, it places the generated test classes in the GeneratedTests folder inside the bin folder of the console app.
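How the output step might look is sketched below. This is an assumption about the mechanics, not the sample project's actual code: GeneratedTestWriter and TrySave are hypothetical names, and the file name is simply the test class name plus .cs. Passing AppContext.BaseDirectory as outputRoot targets the folder the console app runs from, which during development is below bin.

```csharp
using System;
using System.IO;

// Hypothetical helper: not part of the original sample project.
public static class GeneratedTestWriter
{
    // Writes one generated test class to <outputRoot>/GeneratedTests/<testClassName>.cs.
    // Returns false and writes nothing if the LLM call produced no code.
    public static bool TrySave(string outputRoot, string testClassName, string testCode, out string filePath)
    {
        filePath = string.Empty;

        // GenerateTestsAsync returns an empty string on errors, so skip those results.
        if (string.IsNullOrWhiteSpace(testCode))
            return false;

        var outputFolder = Path.Combine(outputRoot, "GeneratedTests");
        Directory.CreateDirectory(outputFolder); // no-op if the folder already exists

        filePath = Path.Combine(outputFolder, testClassName + ".cs");
        File.WriteAllText(filePath, testCode);
        return true;
    }
}
```

A call such as GeneratedTestWriter.TrySave(AppContext.BaseDirectory, "CalculatorTests", code, out var path) would then produce GeneratedTests/CalculatorTests.cs next to the executable.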

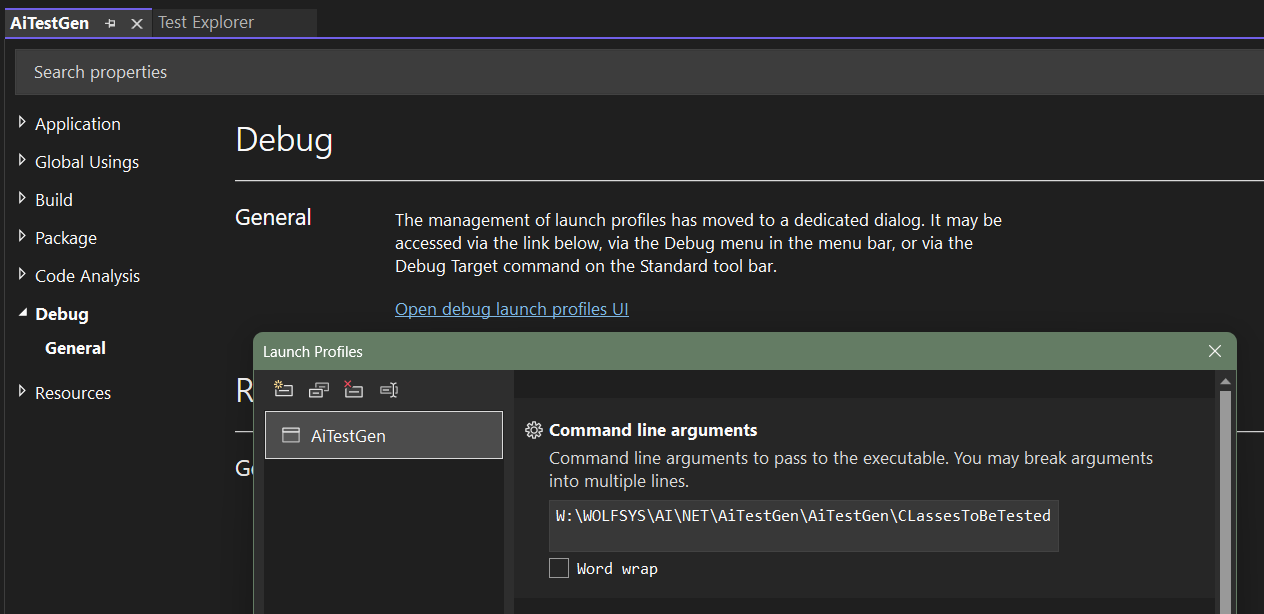

We need to pass the folder containing all the C# classes that require test methods as a command-line argument to our console app. To help during development, you can set this argument via Project Properties / Debug / Open debug launch profiles UI, so you can simply press F5 without running into an argument exception:

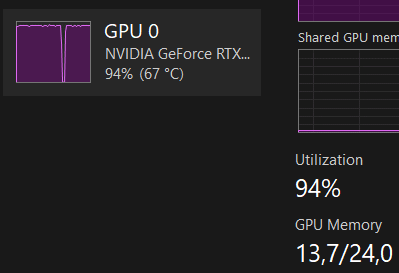
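The argument check itself could be as small as the following sketch. It is an illustration under assumptions, not the sample project's code: CommandLine and ResolveSourceFolder are hypothetical names, and the usage text only mirrors the CLI call shown earlier.

```csharp
using System;
using System.IO;

// Hypothetical helper: not part of the original sample project.
public static class CommandLine
{
    // Returns the absolute path of the folder passed as the first argument.
    // Throws if the argument is missing or the folder does not exist.
    public static string ResolveSourceFolder(string[] args)
    {
        if (args.Length == 0 || string.IsNullOrWhiteSpace(args[0]))
            throw new ArgumentException("Usage: dotnet aitestgen <folder-with-cs-files>");

        var folder = Path.GetFullPath(args[0]);
        if (!Directory.Exists(folder))
            throw new DirectoryNotFoundException($"Folder not found: {folder}");

        return folder;
    }
}
```

Calling this first in Main makes a missing argument fail with a readable usage message instead of an index error further down.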

Depending on the number of classes and methods (and of course your hardware), this can take some time while the GPU starts to sweat.


Tech Stack Recap

| Component | Technology |
|---|---|
| Code Parsing | Roslyn (Microsoft.CodeAnalysis) |
| AI Model | Ollama (GPT/Mistral/Phi) |
| Output | MSTest (default) |
| CLI | .NET 9 Console App |
| Formatting | dotnet format or Roslyn Formatter |