Intro

There are plenty of reasons you might want to run a local AI model — whether it's for privacy, performance, saving on cloud costs, or simply out of curiosity.

Over the past year, running local language models has become much more accessible. Today, there are solutions available that offer user-friendly interfaces and support for local inference.

One such tool that’s gaining momentum is LM Studio. This software excels in acceleration, supporting CUDA, OpenCL, cuBLAS, and Metal, which can significantly enhance performance on compatible hardware. The latest version of LM Studio introduces two powerful features:

Headless Mode: You can now run models in the background without a user interface. This is ideal for automated tasks and services.

Vision Language Model (VLM) Support: Thanks to mlx-vlm, LM Studio can now run models that process both images and text, opening up a wide range of multimodal applications.

In addition to these, LM Studio already supported structured outputs through a system called Outlines. In this post, we’ll show how these features can be put to use in a practical, local VLM task: cleaning up the TEMP\Pictures folder.

The Problem

The TEMP\Pictures folder contains several hundred image files. Some are screenshots of code or technical documentation, others are screenshots of news articles, and others are just funny memes. And then there are plenty of images of ... whatever.

All of these image files should be sorted into subfolders like

- Articles (screenshots of news articles)

- Memes

- Screenshots (of code and documentation)

- Others (the rest of the files)

Doing this by hand is of course not something a developer strives for. So we need a way to automatically categorize these images in order to organize them.

That's where AI comes to the rescue.

Setting up a Local Language Model

First you will have to download and install LM Studio, which provides a nice UI. But you're not tied to it: you could also download and install Ollama (which comes without a UI out of the box, although third-party UIs do exist; just search the web), or use any other solution that runs local language models and is compatible with the OpenAI API.

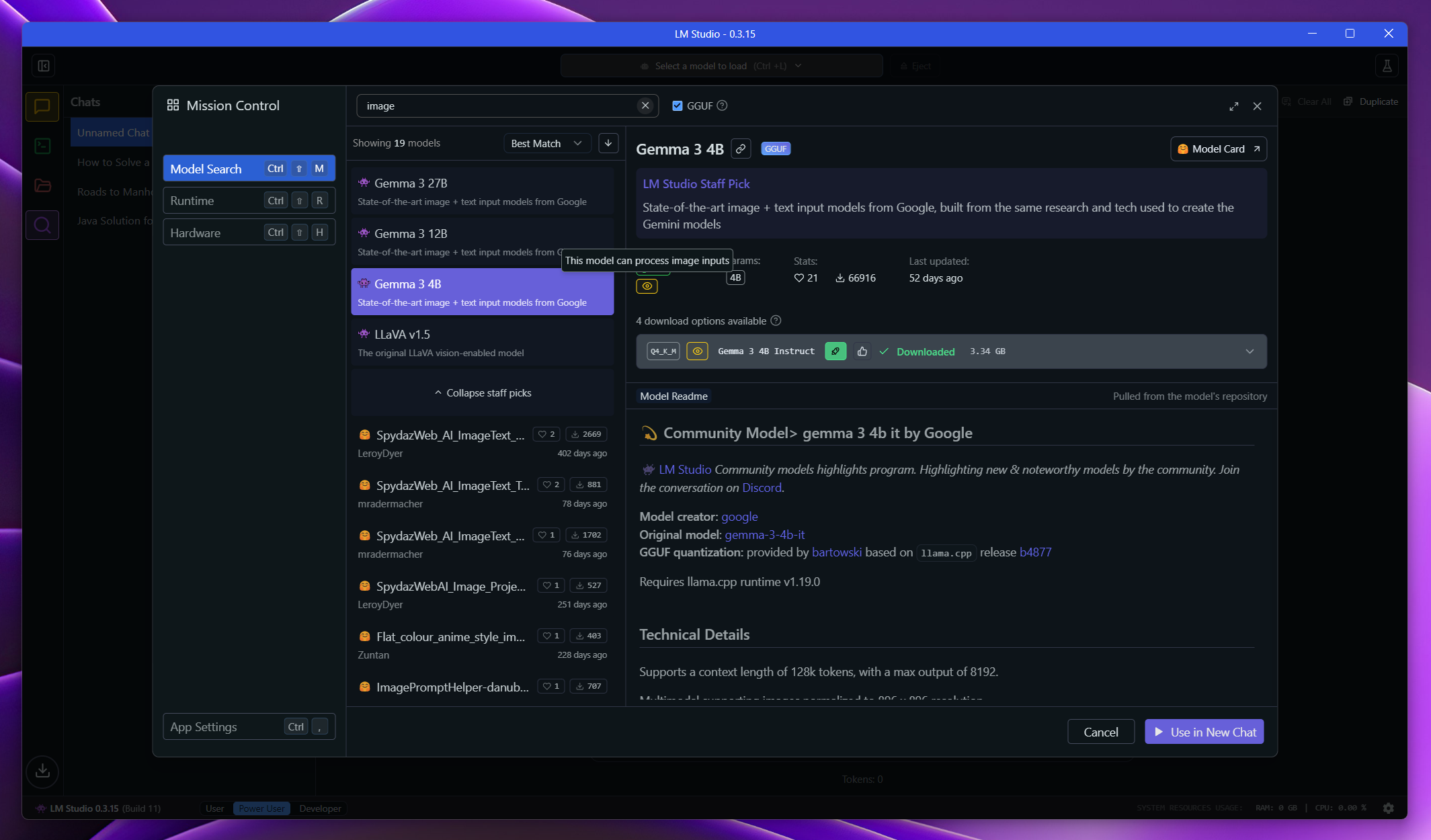

After successful installation you need to pick a model. For our use case we need a model that is capable of processing images. In this demo we will use the gemma-3-4b-it model, which is only about 3.3GB in size. This model is also available for Ollama.

You don't have to use this specific model; any publicly available language model that can process images (note: this does not mean image generation!) will do.

In LM Studio, just click the "discover" button and search for image processing models:

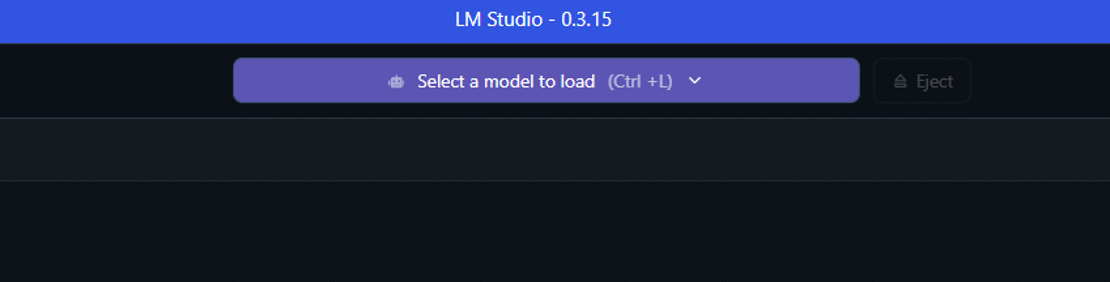

Now you need to start the downloaded model by selecting it in LM Studio. Be sure to use a model with a yellow icon, otherwise the model won't process images (which we need for our goal).

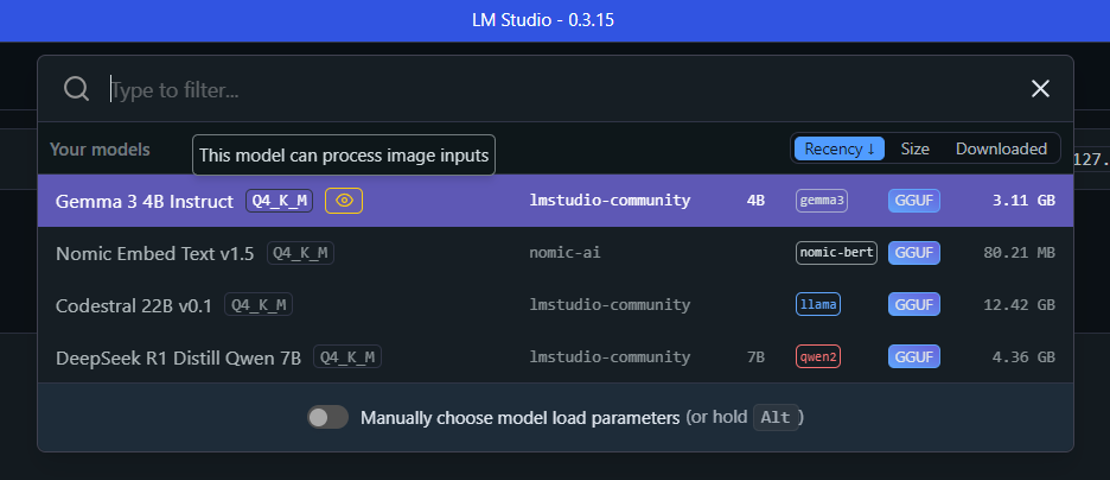

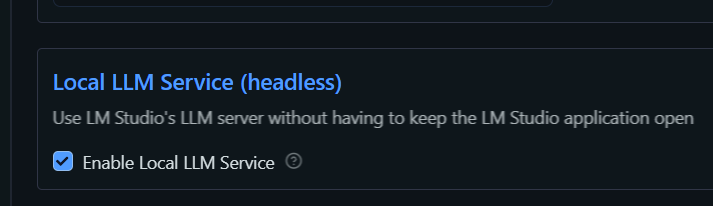

Headless LM Studio

You can run LM Studio either in GUI mode or in a headless state where you don't need to rely on the GUI. To enable the headless state, open the Settings in LM Studio and enable it. You can then operate LM Studio from the shell, just like Ollama.

To use LM Studio programmatically from your own code, run LM Studio as a local server. You can turn on the server from the "Developer" tab in the LM Studio UI, or via the lms command in a CLI:

lms server start

This will allow you to interact with LM Studio via an OpenAI-like REST API.
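As a quick check that the server is reachable, the following minimal sketch requests the model list from the OpenAI-compatible `/v1/models` endpoint. It assumes the address `http://127.0.0.1:1234/v1` that is used throughout this post; the class name `ServerCheck` and its methods are made up for illustration.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

internal static class ServerCheck
{
    // Builds the URL of the model list endpoint from the server base URL
    // (with or without a trailing slash).
    public static Uri BuildModelsUri(string baseUrl) =>
        new Uri(baseUrl.TrimEnd('/') + "/models");

    // Returns the raw JSON describing the available models.
    // Throws if the server is not running or answers with an error status.
    public static async Task<string> GetModelsJsonAsync(string baseUrl)
    {
        using var http = new HttpClient { Timeout = TimeSpan.FromSeconds(10) };
        using var response = await http.GetAsync(BuildModelsUri(baseUrl));
        response.EnsureSuccessStatusCode();
        return await response.Content.ReadAsStringAsync();
    }
}
```

Calling `await ServerCheck.GetModelsJsonAsync("http://127.0.0.1:1234/v1")` from an async method should return a JSON document if the server is up; an `HttpRequestException` usually means the server has not been started or listens on a different port.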

Passing Images from Code to the Language Model

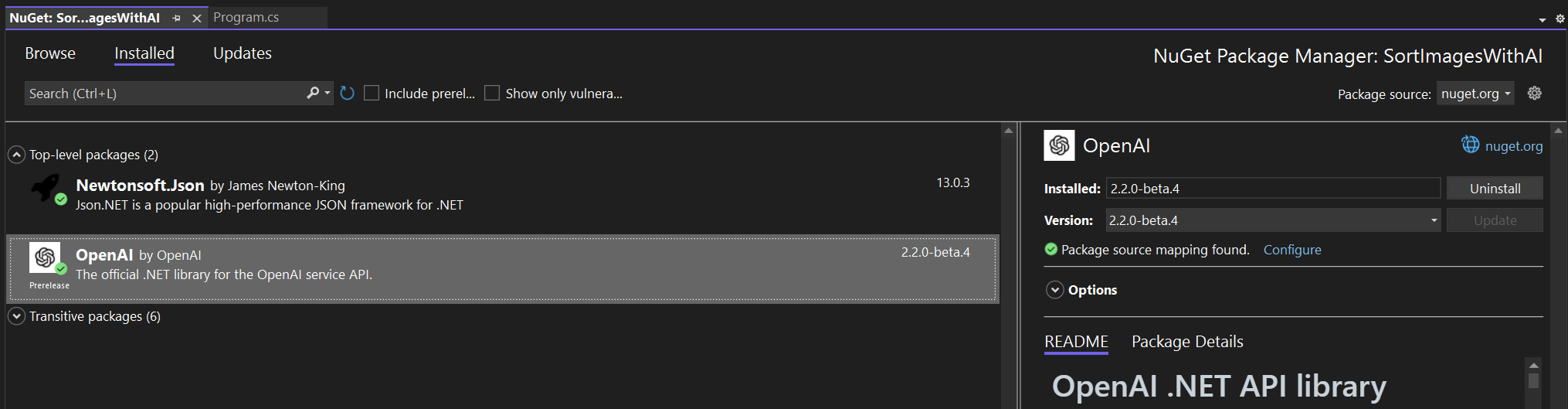

Now it's time to code! Start a new Console Application in Visual Studio and add both the Newtonsoft.Json and the OpenAI NuGet packages.

First, we simply give the AI an image file and ask it what is in the picture. The code for this task looks like this:

using OpenAI;

using OpenAI.Chat;

using System.ClientModel;

internal class Program

{

static async Task Main(string[] args)

{

try

{

string model = "gemma-3-4b-it";

string serverUrl = "http://127.0.0.1:1234/v1"; // change Url/Port according to your installation

string apiKey = "not_needed_for_lmstudio";

string pathToImage = @"E:\TEMP\Pictures\nature_wood.png";

string prompt1 = "What is in this image?";

// We create a client for our server

var client = new OpenAIClient(new ApiKeyCredential(apiKey), new OpenAIClientOptions

{

Endpoint = new Uri(serverUrl)

});

// create the chat client

var chatClient = client.GetChatClient(model);

// read-in all bytes of the image

byte[] imageBytes = File.ReadAllBytes(pathToImage);

// create the image part of the prompt

var contentPart = ChatMessageContentPart.CreateImagePart(

new BinaryData(imageBytes), "image/png");

// create the prompt

var msg = new UserChatMessage(prompt1, contentPart);

// ask the LLM

var response = await chatClient.CompleteChatAsync(msg);

// output the result

Console.ForegroundColor = ConsoleColor.Yellow;

Console.WriteLine(response.Value.Content[0].Text);

}

catch(Exception ex)

{

HandleExecption(ex);

}

Console.ResetColor();

Console.WriteLine("Done.");

}

// output the exception; TODO: logging

static void HandleExecption(Exception ex)

{

Console.ForegroundColor = ConsoleColor.Red;

Console.WriteLine("An error occurred:");

Console.WriteLine(ex);

Console.ResetColor();

}

}

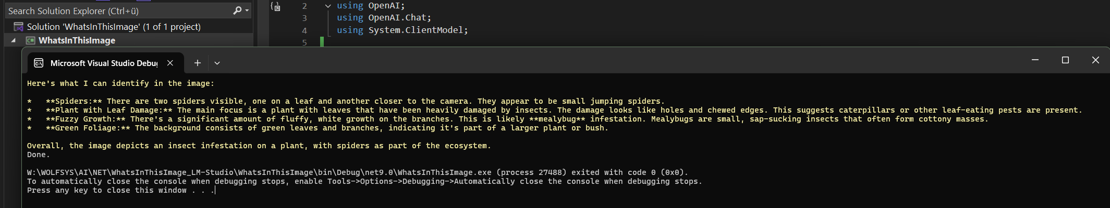

When you've set everything up correctly, the model will tell you something like:

Here's what I can identify in the image:

* Spiders: There are two spiders visible, one on a leaf and another closer to the camera. They appear to be small jumping spiders.

* Plant with Leaf Damage: The main focus is a plant with leaves that have been heavily damaged by insects. The damage looks like holes and chewed edges. This suggests caterpillars or other leaf-eating pests are present.

* Fuzzy Growth: There's a significant amount of fluffy, white growth on the branches. This is likely mealybug infestation. Mealybugs are small, sap-sucking insects that often form cottony masses.

* Green Foliage: The background consists of green leaves and branches, indicating it's part of a larger plant or bush.

Overall, the image depicts an insect infestation on a plant, with spiders as part of the ecosystem.

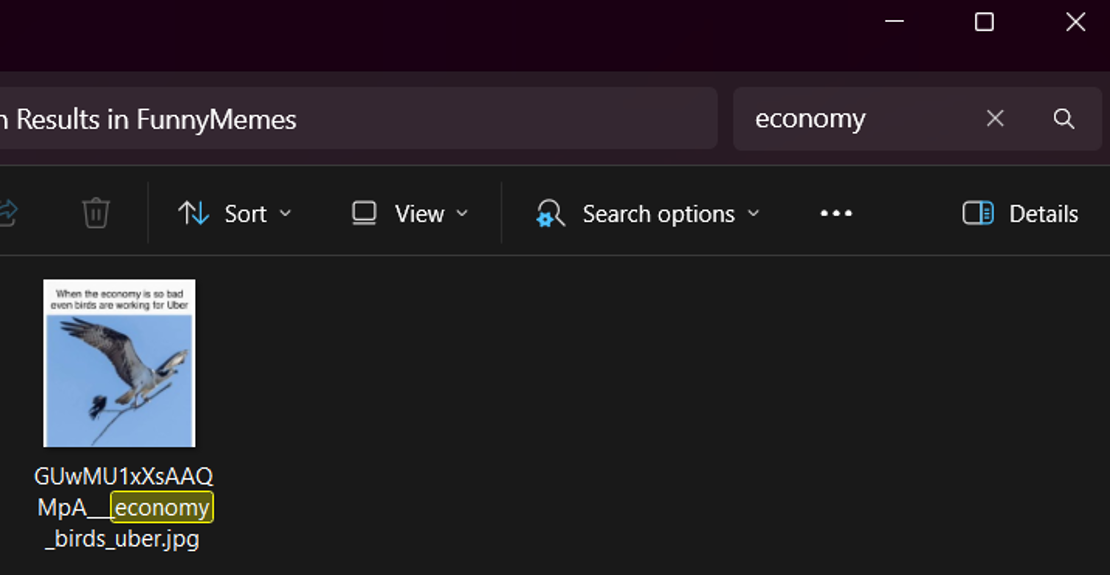

Wow, great! While the result looks promising, it is not particularly helpful for our goal. We need a more structured output, preferably a JSON string. Moreover, we need to categorize the images; after all, the image files should be copied/moved to different folders according to their category. It would also be very handy to add some tags to the filename itself, so that we can, for example, simply search for them in Windows File Explorer.

We achieve this by rewriting the prompt accordingly:

What is this image about? Assign a maximum of 3 tags to the image, and put the image into one of the following categories: meme, article (=news reports, documentations and such stuff), screenshot (=screenshots of software and everything that is running on the OS desktop), other. Respond in JSON format with the keys description, category, and tags.

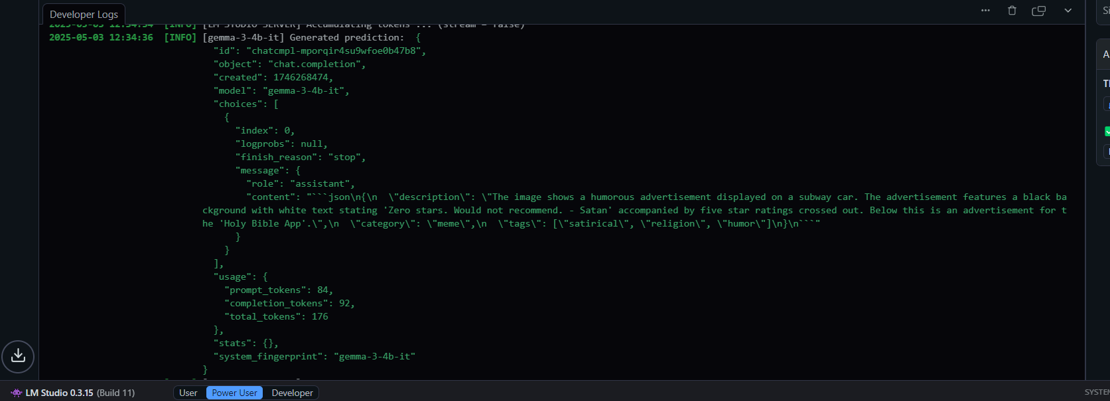

When we apply this prompt to another image (here: a meme), the "Developer" page of LM Studio shows the structured response as a JSON string.

The message/response part (the part that is of interest for our task) wraps the actual JSON string in opening and closing decorators

```json

```

which we have to remove to get the actual JSON string. A call to String.Replace() and we're good to go. Such code decorators are very common when dealing with AI responses.
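A plain String.Replace() also removes triple backticks that happen to appear inside the JSON values. As an alternative, here is a minimal sketch that only strips the first and the last fence line. The names `ResponseCleaner` and `StripCodeFence` are hypothetical and not part of the sample project.

```csharp
using System;

internal static class ResponseCleaner
{
    // The fence marker: three backtick characters.
    private static readonly string Fence = new string('`', 3);

    // Removes the opening fence line (including a language tag such as "json")
    // and the closing fence, if the response is wrapped in them.
    public static string StripCodeFence(string modelResponse)
    {
        string text = modelResponse.Trim();
        if (!text.StartsWith(Fence, StringComparison.Ordinal))
            return text; // no decorators, nothing to do

        // drop the whole first line, i.e. the opening fence plus its language tag
        int firstNewLine = text.IndexOf('\n');
        if (firstNewLine < 0)
            return string.Empty; // only a fence line, no payload
        text = text.Substring(firstNewLine + 1);

        // drop everything from the last fence onwards
        int closingFence = text.LastIndexOf(Fence, StringComparison.Ordinal);
        if (closingFence >= 0)
            text = text.Substring(0, closingFence);

        return text.Trim();
    }
}
```

The result can be passed to the JSON deserializer as-is. A response without decorators is returned unchanged (apart from trimming), so the helper is safe to call on every response.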

Putting it all together

We now know everything we need in order to sort our image files into different folders. Let's start with a class that holds all the important settings, such as the LM Studio configuration and the folders. (You can of course pull this kind of information from a config file later; for simplicity we just hard-code it for now 😉)

namespace SortImagesWithAI

{

public static class Globals

{

// Change these constants according to your needs

// destination sub-directories within the source directory (or elsewhere, just as you like)

public const string IMAGE_DIR_DEST_ARTICLES = @"E:\TEMP\Pictures\NewsArticles";

public const string IMAGE_DIR_DEST_MEMES = @"E:\TEMP\Pictures\FunnyMemes";

public const string IMAGE_DIR_DEST_OTHER = @"E:\TEMP\Pictures\Other";

public const string IMAGE_DIR_DEST_SCRSHOTS = @"E:\TEMP\Pictures\Screenshots";

// source directory containing our images

public const string IMAGE_DIR_SOURCE = @"E:\TEMP\Pictures";

// LM Studio settings

public const string LLM_SERVER_URL = "http://127.0.0.1:1234/v1"; // change this to your LM Studio/Ollama server URL/Port

public const string LLM_API_KEY = "not_needed_for_lmstudio";

public const string LLM_MODEL = "gemma-3-4b-it";

// the user prompt

public const string USER_PROMPT = "What is this image about? Assign a maximum of 3 tags to the image, and put the image into one of the following categories: meme, article (=news reports, documentations and such stuff), screenshot (=screenshots of software and everything that is running on the OS desktop), other. Respond in JSON format with the keys description, category, and tags.";

// the image categories (don't forget to update the user prompt when adding new categories to the enumeration)

public enum ImageCategory

{

Article,

Meme,

Screenshot,

Other

}

}

}

Now let's add a model for the properties (category, tags and so on) of an image file. We add a constructor that takes the model response content and sets up all of the properties we need:

using Newtonsoft.Json;

using Newtonsoft.Json.Converters;

namespace SortImagesWithAI.Model

{

public class ImageProperties

{

public string Description { get; set; }

[JsonConverter(typeof(StringEnumConverter))]

public Globals.ImageCategory Category { get; set; }

public List<string> Tags { get; set; }

public ImageProperties()

{

Description = string.Empty;

Category = Globals.ImageCategory.Other;

Tags = new List<string>();

}

public ImageProperties(string modelResponse)

{

// we need a basic validation of the model response because when dealing with LLMs

// it is not guaranteed that the model always follows the prompt and returns a valid JSON string.

bool IsValidModelResponse()

{

if (!modelResponse.Contains("description"))

return false;

if (!modelResponse.Contains("category"))

return false;

if (!modelResponse.Contains("tags"))

return false;

return true;

}

if (string.IsNullOrWhiteSpace(modelResponse))

throw new ArgumentException("Model answer cannot be null or empty.", nameof(modelResponse));

else if (!IsValidModelResponse())

throw new ArgumentException("Model answer is not in the expected format.", nameof(modelResponse));

var tmpObj = JsonConvert.DeserializeObject<ImageProperties>(

modelResponse.Trim().Replace("```json", "").Replace("```", "").Trim());

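// fall back to the defaults when the JSON could not be deserialized

// or when a key is present but null (otherwise Tags.Count would throw later on)

Description = tmpObj?.Description ?? string.Empty;

Category = tmpObj?.Category ?? Globals.ImageCategory.Other;

Tags = tmpObj?.Tags ?? new List<string>();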

}

}

}

Since we deserialize the string into a class that has an enum property, we need a using directive for Newtonsoft.Json.Converters and have to annotate the Category property with the StringEnumConverter, like this:

[JsonConverter(typeof(StringEnumConverter))]

public Globals.ImageCategory Category { get; set; }
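One thing to keep in mind: the StringEnumConverter throws when the model invents a category that is not part of the enumeration. If you would rather sort such images into "Other" than skip them, a tolerant lookup could look like the following sketch. The enum is repeated here only to keep the example self-contained, and `CategoryParser` is a hypothetical helper that is not part of the sample project.

```csharp
using System;

// Local copy of Globals.ImageCategory, repeated to keep the sketch self-contained.
internal enum ImageCategory { Article, Meme, Screenshot, Other }

internal static class CategoryParser
{
    // Maps the category text returned by the model to the enum (case-insensitive).
    // Null, empty or unknown values end up in ImageCategory.Other.
    public static ImageCategory Parse(string? raw)
    {
        string cleaned = (raw ?? string.Empty).Trim();

        // Enum.GetValues<T>() requires .NET 5 or later
        foreach (ImageCategory value in Enum.GetValues<ImageCategory>())
        {
            if (string.Equals(value.ToString(), cleaned, StringComparison.OrdinalIgnoreCase))
                return value;
        }

        return ImageCategory.Other;
    }
}
```

To use it, the Category would have to be deserialized as a plain string first and then converted with `CategoryParser.Parse(...)`.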

When the app starts, we need to ensure that all the directories we work with exist on disk, so we add a small helper class called DirectoryHelper, which also returns the list of images in our source directory:

namespace SortImagesWithAI.Helpers

{

/// <summary>

/// Creates the necessary destination directories for sorting/categorizing the images.

/// </summary>

internal static class DirectoryHelper

{

public static void InitDirectories()

{

if (!Directory.Exists(Globals.IMAGE_DIR_SOURCE))

throw new DirectoryNotFoundException(

$"The source directory does not exist: {Globals.IMAGE_DIR_SOURCE}");

CreateDirectoryIfNotExists(Globals.IMAGE_DIR_DEST_ARTICLES);

CreateDirectoryIfNotExists(Globals.IMAGE_DIR_DEST_MEMES);

CreateDirectoryIfNotExists(Globals.IMAGE_DIR_DEST_OTHER);

CreateDirectoryIfNotExists(Globals.IMAGE_DIR_DEST_SCRSHOTS);

}

private static void CreateDirectoryIfNotExists(string directoryPath)

{

if (!Directory.Exists(directoryPath))

Directory.CreateDirectory(directoryPath);

}

public static List<string> GetImageFiles(string folderPath)

{

// Define the supported image file extensions

string[] imageExtensions = { "*.jpg", "*.jpeg", "*.png", "*.bmp" };

List<string> imageFiles = new List<string>();

// Loop through each extension and get the files

foreach (var extension in imageExtensions)

imageFiles.AddRange(Directory.GetFiles(folderPath, extension));

return imageFiles;

}

}

}
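GetImageFiles() scans the folder once per extension. As an alternative, here is a sketch that enumerates the folder only once and compares the extensions case-insensitively, so that files like `photo.PNG` are also found on case-sensitive file systems. The class name `ImageFileFinder` is hypothetical; the extension list is the one from the helper above.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

internal static class ImageFileFinder
{
    // Supported image extensions, compared without regard to case.
    private static readonly HashSet<string> ImageExtensions =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { ".jpg", ".jpeg", ".png", ".bmp" };

    // Returns the image files directly inside folderPath (subfolders are ignored),
    // sorted by path so that repeated runs process the files in the same order.
    public static List<string> GetImageFiles(string folderPath) =>
        Directory.EnumerateFiles(folderPath)
                 .Where(file => ImageExtensions.Contains(Path.GetExtension(file)))
                 .OrderBy(file => file, StringComparer.OrdinalIgnoreCase)
                 .ToList();
}
```

Because only the top-level folder is enumerated, files that were already copied into the destination subfolders are not picked up again on the next run.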

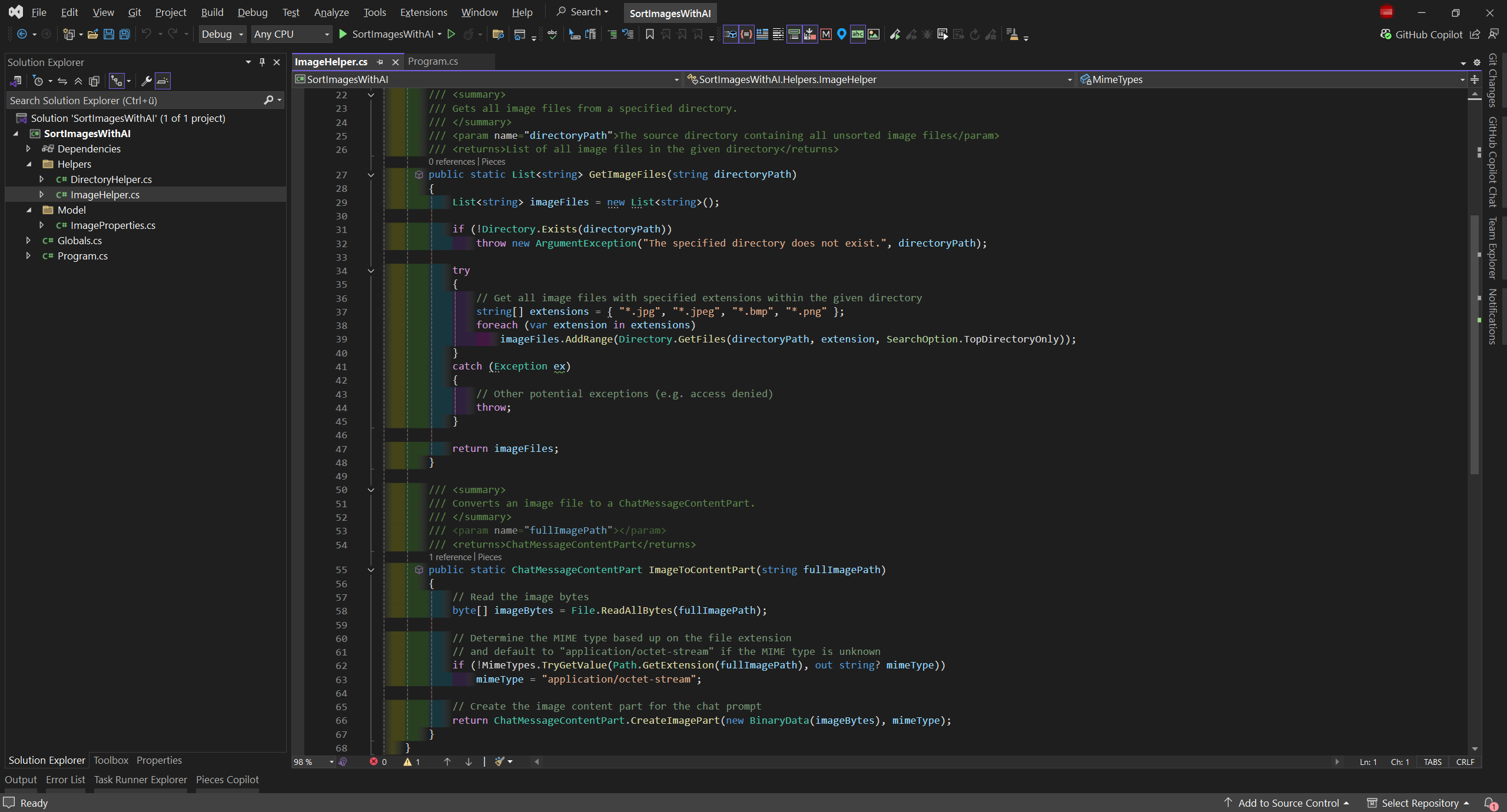

Next, we add another helper class named ImageHelper, which creates the ChatMessageContentPart. In the method ImageToContentPart(), a Dictionary lookup ensures that the correct MIME type is used.

using OpenAI.Chat;

namespace SortImagesWithAI.Helpers

{

internal static class ImageHelper

{

/// <summary>

/// mime types for the image files

/// </summary>

private static readonly Dictionary<string, string> MimeTypes =

new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)

{

{ ".jpg", "image/jpeg" },

{ ".jpeg", "image/jpeg" },

{ ".png", "image/png" },

{ ".bmp", "image/bmp" },

{ ".gif", "image/gif" },

{ ".tiff", "image/tiff" },

{ ".webp", "image/webp" }

};

/// <summary>

/// Converts an image file to a ChatMessageContentPart.

/// </summary>

public static ChatMessageContentPart ImageToContentPart(string fullImagePath)

{

// Read the image bytes

byte[] imageBytes = File.ReadAllBytes(fullImagePath);

// Determine the MIME type based on the file extension

// and default to "application/octet-stream" if the MIME type is unknown

if (!MimeTypes.TryGetValue(Path.GetExtension(fullImagePath), out string? mimeType))

mimeType = "application/octet-stream";

// Create the image content part for the chat prompt

return ChatMessageContentPart.CreateImagePart(new BinaryData(imageBytes), mimeType);

}

}

}

Now we've got everything we need to loop through all the image files in the source folder and copy/move them according to the model's response:

using SortImagesWithAI.Helpers;

using SortImagesWithAI.Model;

using OpenAI;

using OpenAI.Chat;

using System.ClientModel;

using System.Reflection;

namespace SortImagesWithAI

{

internal static class Program

{

static OpenAIClient? client;

static ChatClient? chatClient;

/// <summary>

/// Main entry point for the application.

/// </summary>

static void Main(string[] args)

{

try

{

Init();

MainFileLoop();

}

catch(Exception ex)

{

HandleExecption(ex);

}

Console.WriteLine("Done.");

}

/// <summary>

/// Performs some initializations, like creating the destination directories

/// and the LLM clients.

/// </summary>

static void Init()

{

DirectoryHelper.InitDirectories();

InitClients();

}

/// <summary>

/// Creates the LLM clients.

/// </summary>

static void InitClients()

{

// we create a client for our LLM server

client = new OpenAIClient(new ApiKeyCredential(Globals.LLM_API_KEY), new OpenAIClientOptions

{

Endpoint = new Uri(Globals.LLM_SERVER_URL)

});

// create the chat client based on that LLM client

chatClient = client.GetChatClient(Globals.LLM_MODEL);

}

/// <summary>

/// Main loop that iterates over all image files in the source directory,

/// analyzes them with the LLM, and copies each file to its destination subfolder.

/// </summary>

static void MainFileLoop()

{

foreach (var imgFile in DirectoryHelper.GetImageFiles(Globals.IMAGE_DIR_SOURCE))

{

try

{

// image to prompt

var contentPart = ImageHelper.ImageToContentPart(imgFile);

// create the prompt

var msg = new UserChatMessage(Globals.USER_PROMPT, contentPart);

// ask the LLM about the current image

var response = chatClient!.CompleteChat(msg);

// create the image properties object based on the response

var props = new ImageProperties(response.Value.Content[0].Text);

// show us the properties of the current image

ShowDetails(imgFile, props);

// copy|move the image to the destination directory

CopyFile(imgFile, props);

}

catch (Exception ex)

{

// it's not best practice to create a try...catch block in a loop,

// but since LLMs don't work deterministically, we need to catch exceptions

// and continue with the next image

HandleExecption(ex);

}

}

}

/// <summary>

/// Copies the source file to the destination directory based on its properties

/// and adds the tags to the filename.

/// </summary>

static void CopyFile(string sourceFilePath, ImageProperties props)

{

string destDir = string.Empty;

destDir = props.Category switch

{

Globals.ImageCategory.Article => Globals.IMAGE_DIR_DEST_ARTICLES,

Globals.ImageCategory.Meme => Globals.IMAGE_DIR_DEST_MEMES,

Globals.ImageCategory.Screenshot => Globals.IMAGE_DIR_DEST_SCRSHOTS,

_ => Globals.IMAGE_DIR_DEST_OTHER,

};

string destFileNa = Path.GetFileNameWithoutExtension(sourceFilePath);

// add each tag to the destination filename and convert empty spaces

// to dashes (f.e. when the LLM creates a tag like "rental cost")

if (props.Tags.Count > 0)

{

destFileNa += "__";

foreach (var tag in props.Tags)

destFileNa += $"_{tag.ToLower().Replace(" ", "-")}";

}

// don't forget the file extension

destFileNa += Path.GetExtension(sourceFilePath);

// Hint: We just copy the files here. In case you really want to move them,

// just use File.Move() instead of File.Copy()

string destFilePath = Path.Combine(destDir, destFileNa);

File.Copy(sourceFilePath, destFilePath, true);

}

/// <summary>

/// Prints information about the current file in the loop to the console.

/// </summary>

static void ShowDetails(string nameOfFile, ImageProperties props)

{

if (props != null)

{

Console.WriteLine($"Image: {nameOfFile}");

PrintProperties(props);

Console.WriteLine();

}

}

/// <summary>

/// Prints the properties & their values of the given object to the console

/// via .NET reflection.

/// </summary>

static void PrintProperties(object obj)

{

Type type = obj.GetType();

PropertyInfo[] properties = type.GetProperties();

foreach (PropertyInfo property in properties)

{

object value = property.GetValue(obj);

if (property.PropertyType.IsEnum)

{

Console.WriteLine($"{property.Name}: {Enum.GetName(property.PropertyType, value)}");

}

else if (property.PropertyType == typeof(List<string>))

{

var list = value as List<string>;

Console.Write($"{property.Name}:");

foreach (var item in list)

{

Console.Write($" {item}");

}

Console.WriteLine();

}

else

{

// For other types, print the value directly

// (for real generic use this would be a bit more complex)

Console.WriteLine($"{property.Name}: {value}");

}

}

}

/// <summary>

/// Prints the exception details; TODO: logging

/// </summary>

static void HandleExecption(Exception ex)

{

Console.ForegroundColor = ConsoleColor.Red;

Console.WriteLine("An error occurred:");

Console.WriteLine(ex);

Console.ResetColor();

}

}

}
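One caveat regarding CopyFile(): the tags come straight from the model, so they may contain characters that are not allowed in file names (think of a tag like "c#/.net"). Below is a minimal sketch of a stricter conversion that keeps only letters and digits and collapses everything else into single dashes. `TagSanitizer` is a hypothetical helper and not part of the sample project.

```csharp
using System;
using System.Text;

internal static class TagSanitizer
{
    // Turns a free-text tag into a lowercase, dash-separated token
    // that consists of letters, digits and dashes only.
    public static string ToFileNameToken(string tag)
    {
        var sb = new StringBuilder();

        foreach (char c in tag.Trim().ToLowerInvariant())
        {
            if (char.IsLetterOrDigit(c))
                sb.Append(c);
            else if (sb.Length > 0 && sb[sb.Length - 1] != '-')
                sb.Append('-'); // a run of spaces, slashes, colons etc. becomes one dash
        }

        // a trailing dash can remain when the tag ends with a special character
        return sb.ToString().TrimEnd('-');
    }
}
```

A tag that consists of special characters only results in an empty string, so the caller should skip empty tokens when building the file name.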

download Sample Source Code

(Visual Studio 2022 Solution targeting .NET 9)

Final thoughts

Based on this code you could write a backend that analyzes your complete picture collection, which has grown over the years. Simply loop through all of your images and write properties like description, category and tags to a (local) database. This way you label all your images without a single keystroke! Then build a slick frontend (in whatever tech stack you like) that queries this database to search your huge picture collection efficiently.
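To give a rough idea of such an index without pulling in a database engine, the following sketch uses a JSON Lines file as a stand-in for the database: one JSON record per line, written and read with System.Text.Json. The record type `ImageRecord`, the class `ImageIndex` and the file layout are assumptions made for this illustration; a real backend would more likely use SQLite or a similar store.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text.Json;

// One entry of the index: the image path plus the properties returned by the model.
internal sealed record ImageRecord(string FilePath, string Description, string Category, List<string> Tags);

internal static class ImageIndex
{
    // Appends one record as a single JSON line to the index file.
    public static void Append(string indexFile, ImageRecord record) =>
        File.AppendAllText(indexFile, JsonSerializer.Serialize(record) + Environment.NewLine);

    // Returns all records that carry the given tag (case-insensitive).
    public static List<ImageRecord> FindByTag(string indexFile, string tag)
    {
        var hits = new List<ImageRecord>();

        foreach (string line in File.ReadLines(indexFile))
        {
            if (string.IsNullOrWhiteSpace(line))
                continue;

            ImageRecord? record = JsonSerializer.Deserialize<ImageRecord>(line);
            if (record?.Tags != null &&
                record.Tags.Exists(t => string.Equals(t, tag, StringComparison.OrdinalIgnoreCase)))
            {
                hits.Add(record);
            }
        }

        return hits;
    }
}
```

The main loop would call `ImageIndex.Append(...)` once per analyzed image, and a frontend could start with `ImageIndex.FindByTag(...)` before moving on to a real query engine.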

But be aware: in this example we've used the tiny gemma-3-4b-it model, which is only about 3.3GB in size. While this saves disk space and computing power, the results will not be ideal for every image.

Let's take, for example, this screenshot of Visual Studio 2022:

The model describes this image as follows:

Image: E:\TEMP\Pictures\env.png

Description: A screenshot of a code editor (likely Android Studio) displaying a project structure with numerous files and folders. The interface shows various panes for navigating the codebase, including file listings, directory views, and search functionality.

Category: Screenshot

Tags: android-studio code-editor software-development

But that's not surprising at all, because this model was not trained specifically for code recognition. This is not its domain, so it is simply guessing. On the other hand, the model was trained by Alphabet/Google, so it is hardly surprising that it favors Google products over those of Microsoft 😜

Depending on your needs, you will have to experiment with several different models and with their inference parameters (for example the temperature).